Artificial Intelligence: How to Ensure its Security?

How AI is reshaping cybersecurity?

This post is the third of our series about Artificial Intelligence (AI) in cybersecurity.

In previous articles, we explored the different usages of AI for offensive and defensive applications: on the one hand, hackers are now using AI to orchestrate large scale attacks, making offensives techniques faster and more difficult to detect and respond to. On the other hand, defensive applications are also emerging, as the trust in AI slowly increases. Threat detection technologies can now rely on powerful techniques based on AI and Machine Learning (ML) to analyze large and complex data. And while traditional detection systems can only defend against known attacks, another great advantage of AI- algorithms is that they can detect anomalies and new unknown attacks, and therefore better keep up with the ever-growing number of threats.

Why AI should be protected?

As AI makes its way in the security ecosystem, not only in the cloud but also in the embedded world and in personal computers, new threats are emerging: attacks that target AI systems themselves.

Indeed, the omnipresence of AI algorithms makes them a target of choice for attackers, for multiple reasons:

– AI is used in safety-critical systems like self-driving cars that rely on image recognition to compute their trajectory.

– It is also used in protection mechanisms like IDSs, anti-viruses, etc.

– AI sometimes manipulates private and sensitive data.

– Lastly, AI models themselves are valuable assets and can be the target of intellectual property theft.

Just like cryptographic algorithms, AI and machine learning models must be designed to be robust against various types of attacks. MITRE has released a classification of such threats called ATLAS (Adversarial Threat Landscape for Artificial-Intelligence Systems) serving as a knowledge base for AI security, referencing attacks and use-cases.

Adversarial Machine Learning

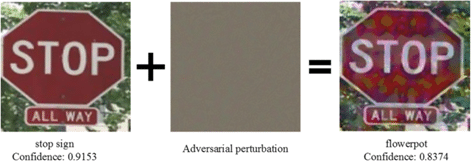

Adversarial attacks mostly target image recognition systems and consist in creating adversarial examples to use as input of the system. Adversarial examples are typically images with small perturbations that prevent a machine learning model to analyse them correctly. Those perturbations may look invisible to the human eye but could impact the safety of a vehicle if a road sign was not identified correctly (a stop sign, for example).

Adversarial input, from [1]. The stop sign is mistook for a flowerpot because of the adversarial perturbation.

Several studies of this type have been published on automotive ADAS systems. It has also been demonstrated that using small patches or by projecting images on the road with a drone, automatic driving system of Tesla cars could no longer recognize their environments [2, 3].

While such scenarios may seem unpractical for an attacker, it is important to note that adversarial data samples can be crafted to evade any type of AI-based detection application (not only image recognition systems), if the underlying model is not robust enough: malware detection applications, network intrusion detection systems, etc. To prevent such attacks, it is necessary to harden the models, generally during the training phase using adversarial training methodologies whose objective is to make the model secure against adversarial examples.

A wide scope of threats

The term “Adversarial Machine Learning” has then been used to describe the global study of threats against Machine Learning systems, which includes various types of other attacks. Here is an overview of the main different threats.

Data poisoning.

The performance of ML models is dependent of their training data and of the training method that is used to shape them. Data poisoning attacks aims at inserting wrong data samples in the training dataset, to manipulate the model behavior at runtime. It is possible for internal or external actors to insert those backdoor by corrupting some dataset sample values and/or labels.

Fault Injection Attacks (FIA).

Machine learning systems can also be the target of physical attacks. With a fault injection attack, it was shown that it is possible to skip instruction in the activation functions of deep neural networks with laser injection and therefore modify the output of the ML system [4]. Model parameters weights can also be target of FIA, using cheaper injection means like clock glitching.

Side Channel Attacks (SCA) and Reverse Engineering

Side channel analysis has also been used to spy on private data of AI systems: for example, on the input images of an AI accelerator through power analysis [5], or to extract the weights of neural network through EM emanation from a processor like Cortex M7 [6]. The outcome of the latter attack is completely different from the above-mentioned evasion examples: here, the objective is more about reverse engineering the architecture and/or the internal parameters of the model in order to clone it, which constitutes an infringement of the AI Intellectual Property. This type of threat concerns mostly IP protected AI where model parameters are kept secret, which is also often the case in AI as a Service (AIaaS) applications.

Attacks on AIaaS

Indeed, many AI applications offer their functionalities as cloud services, the most common being Large Language Models (LLM) and particularly ChatGPT. In that case, AI models are black boxes that can be queried from remote to produce an output which is sent back to the user. In such a context, AI model parameters and training data are valuable assets that are therefore kept secret and unknown to the user. The training data is also sensitive as it may contain private or personally identifiable information (PIIs).

Those AIaaS applications are targeted by different types of attacks: model extraction or cloning, as discussed, as well as model inversion attacks and the recent Membership Inference Attacks (MIA) which aim at obtaining information about the training data of a model. MIAs query the model to determine from its output whether a particular data sample was used during its training or not, while model inversion attacks downright aim at reconstructing the entire training dataset.

Lastly, it is also possible to create Denial of Service attacks on AIaaS systems by submerging them with large amounts of request of by crafting specific inputs that cause the ML system to use a lot of resources.

Threats and vulnerabilities trends for 2024.

2023 and 2024 saw the rise of ChatGPT, and inevitably of the associated risks. The language model has now more than 180 million users, and is being used daily by engineers, developers, etc. for their work. First, there has been concerns that ChatGPT would be used by hackers to automatically craft malicious phishing emails that would be impossible to distinguish from one written by a human. Even more concerning, it was discovered that cybercriminals were using ChatGPT to create new hacking tools and malware, despite some protections present in the tool to avoid those malicious usages. Lastly, private data of users, including chat history has already leaked because of a vulnerability in the Redis library.

All those recent events have helped the industry to become more aware of the security risks related to the massive usage of Artificial Intelligence, and a lot of companies have banned the usage of tools like ChatGPT. By extension, AI leaders should also become more conscious of the variety of attacks targeting AI systems.

Lastly, in 2023, ATLAS Mitre has expanded its database to include a classification of mitigations for adversarial machine learning. Researchers and industrials can now rely on this knowledge base to choose appropriate countermeasures for their AI applications. However, AI still need to be tested thoroughly to ensure that it is robust against a given threat.

Secure-IC solutions on this context

Secure-IC provides the Laboryzr™, a complete evaluation platform to test the robustness of devices and designs against Side Channel Attacks (SCA) and Fault Injection Attacks (FIA). With this complete laboratory, it is possible to test physical devices, RTL designs or software binaries against SCA and FIA, including AI and machine learning implementations. Indeed, the Laboryzr™ tools contains methods for checking any sensitive data leakages and allows to inject faults on any type of device or design. In particular, the pre-silicon Virtualyzr™ evaluation tool simulate next generation of AI-based application for system-on-chip regarding fault perturbation sensitivity. The side channel capabilities of t the Analyzr™ platform can be used to test for leakages of a physical system, and also for reverse engineering the internals of a chip extracting the weights of neural network through EM emanation, for example. Besides, with the Catalyzr™ tool which focuses on software security, we can instrument compiled binaries to simulate instruction skip and security verification bypass of neural networks, for example. Finally, Secure-IC also offers side channel and fault injection evaluation as services.

[1] Wu, Fei, et al. “Defense against adversarial attacks in traffic sign images identification based on 5G.” EURASIP Journal on Wireless Communications and Networking 2020.1 (2020): 1-15.

[2] Tencent Keen Security Lab. “Experimental security research of Tesla autopilot.” Tencent Keen Security Lab (2019).

[3] Nassi, Ben, et al. “Phantom of the adas: Phantom attacks on driver-assistance systems.” Cryptology ePrint Archive (2020).

[4] Breier, Jakub, et al. “Deeplaser: Practical fault attack on deep neural networks.” arXiv preprint arXiv:1806.05859 (2018).

[5] Wei, Lingxiao, et al. “I know what you see: Power side-channel attack on convolutional neural network accelerators.” Proceedings of the 34th Annual Computer Security Applications Conference. 2018.

[6] Joud, Raphaël, et al. “A Practical Introduction to Side-Channel Extraction of Deep Neural Network Parameters.” International Conference on Smart Card Research and Advanced Applications. Cham: Springer International Publishing, 2022.

Author: Thomas Perianin

Do you have questions on this topic and on our protection solutions? We are here to help.

Contact us